Agentic AI vs Copilot-Style Assistants: What Actually Changes?

What does “agentic” really mean in practice?

In a coding context, “agentic” refers to systems that can pursue a goal with partial autonomy. Instead of responding only to a single prompt, the assistant can decide what to do next based on the current state of the codebase and the feedback it receives.

Practically, this means the tool is not limited to generating code snippets. It can explore the repository, understand how files relate to each other, edit multiple files, run tests or build commands, observe failures, and attempt fixes until the task is complete. The developer does not guide every micro-step. They define the objective and supervise the outcome.
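The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular tool works internally: `apply_change` is a hypothetical stand-in for the model editing files, and `check_cmd` is whatever command your project treats as the definition of success, such as a test suite or a build. The point is the control flow: change, run the check, feed the failure output back, and stop after a fixed budget.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable, Optional

def run_check(cmd: list[str]) -> tuple[bool, str]:
    """Run the command that defines success; return (passed, combined output)."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    return proc.returncode == 0, proc.stdout + proc.stderr

def agent_loop(
    apply_change: Callable[[str], None],
    check_cmd: list[str],
    max_attempts: int = 5,
) -> Optional[int]:
    """Change, check, read the failure, try again. Returns the passing attempt number, or None."""
    feedback = ""  # no failure output exists before the first attempt
    for attempt in range(1, max_attempts + 1):
        apply_change(feedback)  # hypothetical stand-in for the model editing files
        passed, output = run_check(check_cmd)
        if passed:
            return attempt
        feedback = output  # the failure output becomes input to the next attempt
    return None  # budget exhausted: hand control back to the developer

# Toy usage: the "project" is a single file, and the check passes once it reads "fixed".
target = Path(tempfile.mkdtemp()) / "module.txt"
CHECK_SCRIPT = (
    "import sys, pathlib\n"
    "text = pathlib.Path(sys.argv[1]).read_text()\n"
    "if text != 'fixed':\n"
    "    print('FAIL: expected fixed, got ' + text)\n"
    "    sys.exit(1)\n"
)
check_cmd = [sys.executable, "-c", CHECK_SCRIPT, str(target)]

def toy_change(feedback: str) -> None:
    # First attempt writes a wrong value; once the feedback reports a failure, it "fixes" it.
    target.write_text("fixed" if "FAIL" in feedback else "broken")

print(agent_loop(toy_change, check_cmd))  # → 2
```

The attempt cap is an assumption of this sketch, but some budget like it matters: when the loop cannot converge, returning `None` hands the problem back to a human instead of letting the agent keep editing.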

This is why many modern tools offer an “agent mode” rather than just smarter autocomplete. The assistant is no longer reacting only to the cursor position. It is reacting to the state of the project.

The real shift: from assistance to delegation

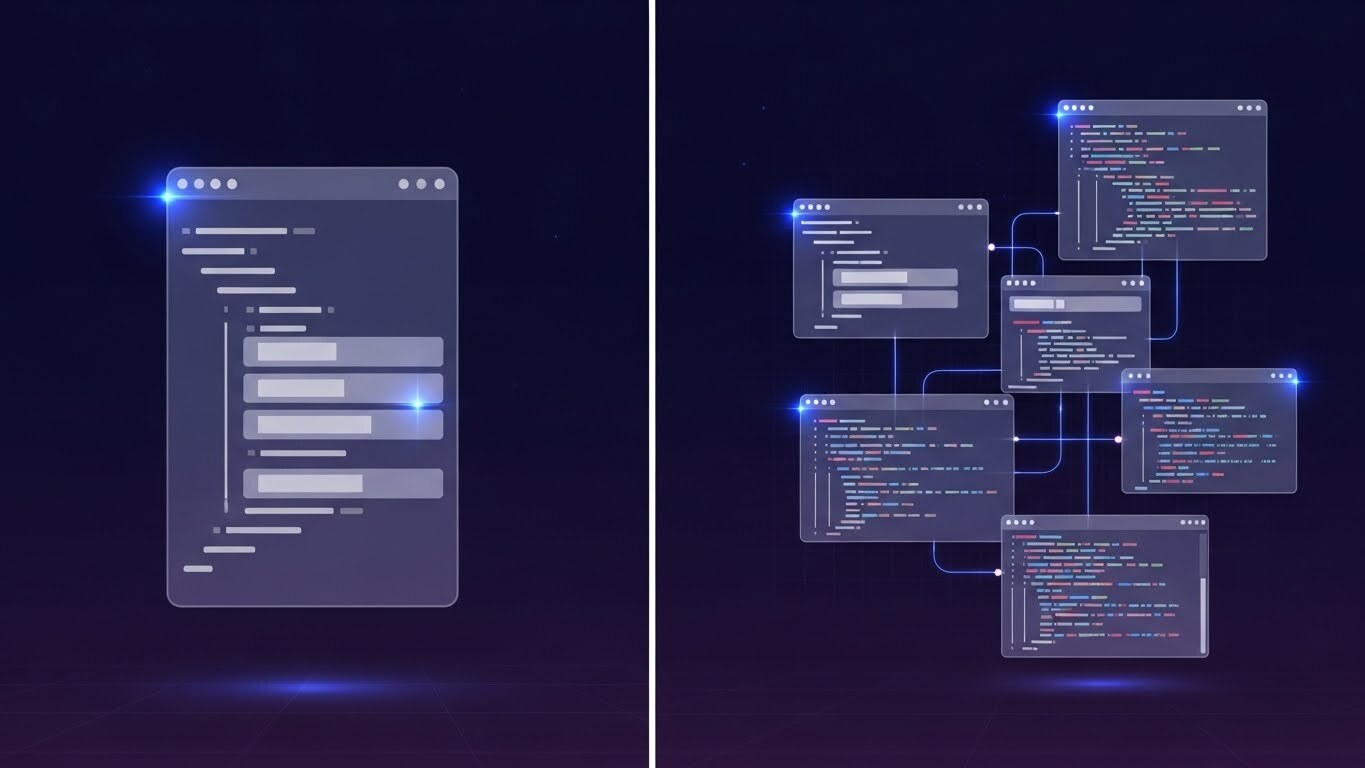

Copilot-style assistants are optimized for immediacy. You ask for help while typing, and the tool responds with something useful in that exact moment. This fits naturally into traditional pair-programming workflows.

Agentic tools, by contrast, are optimized for delegation. You are not asking “how do I write this function?” You are saying “make this change happen, and tell me when it works.”

This distinction matters because it changes who owns sequencing and coordination. With Copilot-style tools, the developer decides the order of operations. With agentic AI, the assistant can decide how to break the task down, while the human focuses on intent, constraints, and review.

What actually changes in day-to-day work?

The difference is not only about capability. It is about where responsibility lives during execution.

How workflows change when agents enter the IDE

One of the first changes teams notice is how tasks are defined. With Copilot-style tools, work naturally gets broken into small prompts because the assistant cannot move far without human input. Agentic tools encourage larger, outcome-based requests, which means constraints must be clearer upfront.

Instead of prompting repeatedly, developers need to specify things like which directories are in scope, which commands define success, and which areas of the codebase must not be touched. The quality of the initial instruction matters more than clever phrasing.
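One way to make those constraints explicit is to write them down as data rather than prose. The sketch below is hypothetical: `TaskSpec`, its fields, and `out_of_bounds` are illustrative names, not any tool's configuration format, and the example paths and commands are placeholders. It shows how scope, forbidden areas, and success commands can be checked mechanically against the files an agent actually changed.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath

@dataclass(frozen=True)
class TaskSpec:
    """Hypothetical task definition: the goal plus the constraints around it."""
    goal: str
    in_scope: tuple[str, ...]                # directories the agent may edit
    forbidden: tuple[str, ...] = ()          # paths that must stay untouched, even inside scope
    success_commands: tuple[str, ...] = ()   # commands that must pass for the task to count as done

def out_of_bounds(spec: TaskSpec, changed_files: list[str]) -> list[str]:
    """Return changed files that fall outside the scope or inside a forbidden area."""
    violations = []
    for name in changed_files:
        path = PurePosixPath(name)
        inside_scope = any(path.is_relative_to(root) for root in spec.in_scope)
        touches_forbidden = any(path.is_relative_to(root) for root in spec.forbidden)
        if not inside_scope or touches_forbidden:
            violations.append(name)
    return violations

# Placeholder example: paths and commands are illustrative only.
spec = TaskSpec(
    goal="Replace the deprecated logging helper in the billing service",
    in_scope=("services/billing",),
    forbidden=("services/billing/migrations",),
    success_commands=("pytest services/billing", "ruff check services/billing"),
)

changed = [
    "services/billing/api.py",
    "services/billing/migrations/0042_add_index.py",
    "shared/utils.py",
]
print(out_of_bounds(spec, changed))
# → ['services/billing/migrations/0042_add_index.py', 'shared/utils.py']
```

Running a check like this on the diff before review turns a constraint that would otherwise live only in the prompt into something that fails loudly.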

Another shift happens during review. Agentic output often arrives as a larger diff. Reviewing that diff feels less like checking syntax and more like reviewing a junior engineer’s pull request. You evaluate architecture, boundaries, side effects, and test quality, not just correctness.

Finally, the terminal becomes part of the AI loop. Agentic tools frequently rely on running tests, builds, or linters and reacting to failures. If your local or CI environment is slow, flaky, or poorly documented, agentic workflows degrade quickly.
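Before handing a feedback loop to an agent, it can help to check whether the success command itself is trustworthy. The sketch below is an illustrative probe, not a standard tool: `probe_check` is a hypothetical helper that runs the same command several times on unchanged code and reports whether the outcomes disagree and how long the slowest run took. A check that passes and fails on identical code will send an agent chasing failures it did not cause.

```python
import subprocess
import sys
import time

def probe_check(cmd: list[str], runs: int = 3) -> dict:
    """Run the same command several times on unchanged code; report outcomes and timing."""
    outcomes: list[bool] = []
    durations: list[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        proc = subprocess.run(cmd, capture_output=True, text=True)
        durations.append(time.perf_counter() - start)
        outcomes.append(proc.returncode == 0)
    return {
        "outcomes": outcomes,
        "flaky": len(set(outcomes)) > 1,   # same input, different verdicts
        "max_seconds": max(durations),     # slowest run, a rough worst case per iteration
    }

# Example: probe a trivially passing command. In practice, substitute your test or build command.
report = probe_check([sys.executable, "-c", "pass"])
print(report["outcomes"], report["flaky"])  # → [True, True, True] False
```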

Where Copilot-style assistants still shine

Copilot-style assistance remains the better choice when work requires constant human judgment and tight control. This includes exploratory coding, learning an unfamiliar codebase, or implementing sensitive logic where every decision needs deliberate consideration.

It is also a better fit for highly interactive sessions where the developer wants to think out loud, ask questions, and adjust direction frequently. In those moments, autonomy adds friction instead of removing it.

Think of Copilot-style tools as precision instruments. They help you think and type faster, but they never take the wheel.

Where agentic AI creates real leverage

Agentic AI becomes compelling when tasks are clearly defined and easy to validate. Refactors, dependency upgrades, test generation, documentation updates, and repetitive cleanup work are strong candidates.

In these cases, the value is not that the AI writes better code than a human. It is that it can execute a complete loop without stopping. Plan, change, test, fix, repeat. That removes a large amount of cognitive overhead from experienced developers and frees them to focus on design and decision-making.

What does not change, despite the hype

Agentic AI does not understand your business context automatically. It does not know which trade-offs matter unless you tell it. It also does not replace tests as the ultimate source of truth. Without strong validation, agentic tools can produce confident-looking results that hide subtle regressions.

Above all, review quality matters more, not less. As throughput increases, the cost of shallow reviews rises. Teams that succeed with agentic AI treat review as a first-class engineering skill.

The bottom line

Copilot-style assistants improve how fast you write code.

Agentic assistants change how work is structured. They move teams from step-by-step execution toward goal-based delegation, with humans acting as supervisors rather than typists.

That is why the difference feels fundamental. It is not just a better suggestion engine. It is a different way of organizing software work.